Yin, Arturo, and I have started implementing a tap-inspired musical shoe. Right now we’re working on a single shoe, with 5 force-sensitive resistors (FSRs) on the bottom designed to capture the nuances of a footfall and translate them into sounds (either sampled or synthesized, we’re not sure yet).

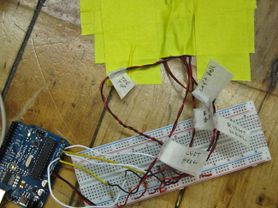

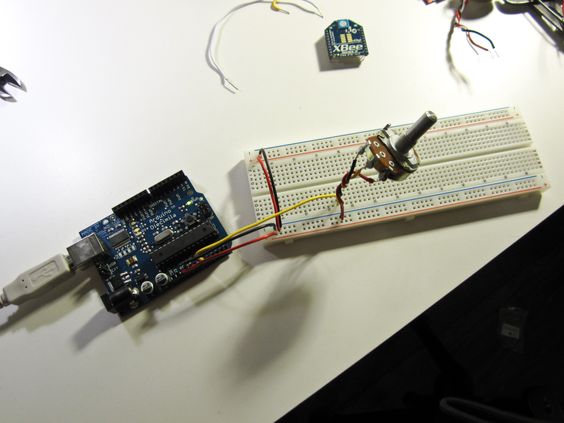

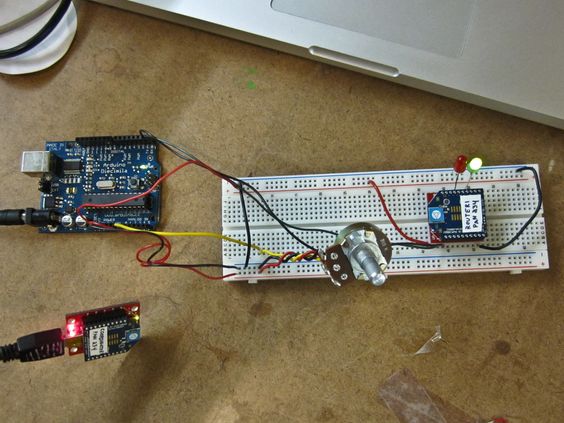

Here’s how the system looks so far:

On the bottom of the shoe, there’s a layer of thin foam designed to protect the FSRs and evenly distribute the weight of the wearer. We ran into some trouble with the foam tearing on cracks in the floor, so we’re looking for a way to improve durability or maybe laminate something over the foam to protect it.

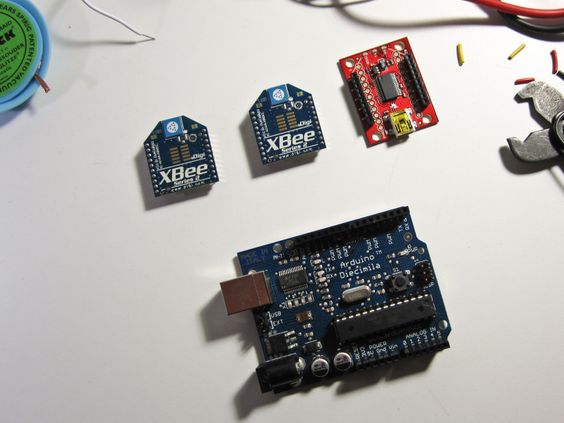

The challenge now is to find a way to work with all of the data coming in from each foot: how best to map the FSRs’ responses to the wearer’s movements in an intuitive and transparent way. On the technical front, we’re going to need to make the system wireless so the wearer has freedom of movement, and find a subtler way to route the sensor wires. There are also concerns about the long-term durability of the FSRs, so we might need to make them easily replaceable. This could be tricky since each sensor is buried under foam and tape…
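As one possible starting point for the mapping question, here is a minimal sketch of a per-sensor strike detector with hysteresis: a sensor fires once when its reading rises past a press threshold, and cannot fire again until the reading drops below a lower release threshold. This is not something we've built or tested on the shoe. The names `StrikeDetector` and `updateStrike` are hypothetical, and the threshold values are placeholders on the 0-1023 `analogRead()` scale that would need tuning against real footfalls.

```cpp
// Hypothetical thresholds on the 0-1023 analogRead() scale.
// These are placeholders, not measured values; tune them per sensor.
const int PRESS_THRESHOLD = 300;    // at or above this, a press begins
const int RELEASE_THRESHOLD = 200;  // below this, the sensor re-arms

// Per-sensor state: one of these would be kept for each FSR.
struct StrikeDetector {
  bool pressed = false;  // true while the sensor is considered loaded
};

// Feed one reading from a sensor.
// Returns true exactly once per press, on the reading where the
// press begins. Readings that wobble between the two thresholds
// do not retrigger, which is the point of the hysteresis gap.
bool updateStrike(StrikeDetector &d, int reading) {
  if (!d.pressed) {
    if (reading >= PRESS_THRESHOLD) {
      d.pressed = true;
      return true;  // rising edge: a new strike
    }
    return false;
  }
  if (reading < RELEASE_THRESHOLD) {
    d.pressed = false;  // released: ready for the next strike
  }
  return false;
}
```

The code avoids the standard library, so in principle it could run inside an Arduino `loop()` with one `StrikeDetector` per pin, or be ported to whatever ends up generating the sound. Firing on the rising edge rather than on release keeps latency low, which seems important for anything tap-like.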

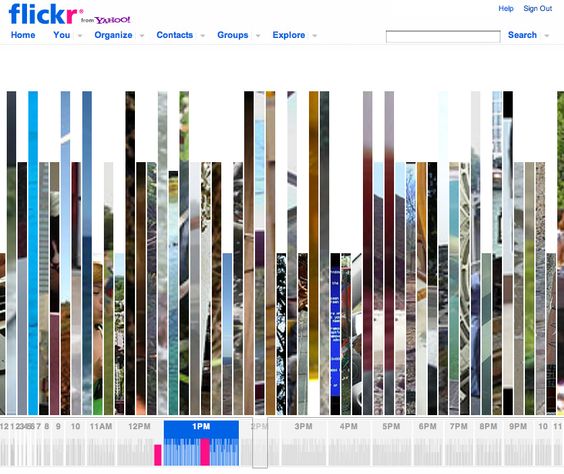

We’ve written some very basic code for now, just enough to get the signal from each FSR and graph the response in Processing.

Here’s the Arduino code:

    // Multi-channel analog serial reader.
    // Adapted from "sensor reader".
    // Reads whichever pins are specified in the sensor pin array
    // and sends them out to serial in a period-delimited format.

    // Read the inputs from the following pins.
    int sensorPins[] = { 0, 1, 2, 3, 4, 5 };

    // Specify the length of the sensorPins array.
    int sensorCount = 6;

    void setup() {
      // Configure the serial connection:
      Serial.begin(9600);
    }

    void loop() {
      // Loop through all the sensor pins, and send
      // their values out to serial.
      for (int i = 0; i < sensorCount; i++) {
        // Send the value from the sensor out over serial.
        Serial.print(analogRead(sensorPins[i]), DEC);

        if (i < (sensorCount - 1)) {
          // Separate each value with a period.
          Serial.print(".");
        }
        else {
          // If it's the last sensor, skip the
          // period and send a line feed instead.
          Serial.println();
        }
      }

      // Optionally, let the ADC settle.
      // I skip this, but if you're feeling superstitious...
      // delay(10);
    }

And the Processing code:

    // Multi-channel serial scope.
    // Takes a string of period-delimited analog values (0-1023) from the serial
    // port and graphs each channel.

    // Import the Processing serial library.
    import processing.serial.*;

    // Create a variable to hold the serial port.
    Serial myPort;

    // Horizontal drawing position within each channel's strip.
    int graphXPos;

    void setup() {
      // Change the size to whatever you like, the
      // graphs will scale appropriately.
      size(1200, 512);

      // List all the available serial ports.
      println(Serial.list());

      // Initialize the serial port.
      // The port at index 0 is usually the right one, though you might
      // need to change this based on the list printed above.
      myPort = new Serial(this, Serial.list()[0], 9600);

      // Read bytes into a buffer until you get a linefeed (ASCII 10):
      myPort.bufferUntil('\n');

      // Set the graph line color.
      stroke(0);
    }

    void draw() {
      // Nothing to do here.
    }

    void serialEvent(Serial myPort) {
      // Read the serial buffer.
      String myString = myPort.readStringUntil('\n');

      // Make sure you have some bytes worth reading.
      if (myString != null) {
        // Make sure there's no white space around the serial string.
        myString = trim(myString);

        // Turn the string into an array, using the period as a delimiter.
        int sensors[] = int(split(myString, '.'));

        // Find out how many sensors we're working with.
        int sensorCount = sensors.length;

        // Again, make sure we're working with a full package of data.
        if (sensorCount > 1) {
          // Loop through each sensor value, and draw a graph for each.
          for (int i = 0; i < sensorCount; i++) {
            // Set the offset based on which channel we're drawing.
            int channelXPos = graphXPos + (i * (width / sensorCount));

            // Map the value from the sensor (0-1023) to fit the height of the window.
            int sensorValue = round(map(sensors[i], 0, 1023, 0, height));

            // Draw a line to represent the sensor value.
            line(channelXPos, height, channelXPos, height - sensorValue);
          }

          // At the edge of the screen, go back to the beginning:
          if (graphXPos >= (width / sensorCount)) {
            // Reset the X position.
            graphXPos = 0;

            // Clear the screen.
            background(255);
          }
          else {
            // Increment the horizontal position for the next reading.
            graphXPos++;
          }
        }
      }
    }
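
For anyone who wants to read the same stream outside of Processing, here is a rough sketch of a parser for the period-delimited line format in standard C++ (desktop side, not Arduino, since it uses `std::string` and `std::vector`). The function name `parseSensorLine` is hypothetical. It assumes each line holds only digits and periods, followed by the carriage return and line feed that `Serial.println()` sends, and that values stay within the 0-1023 range of `analogRead()`.

```cpp
#include <cctype>
#include <string>
#include <vector>

// Parse one line such as "512.0.1023.17.300.4" into integer readings.
// Returns an empty vector if the line is malformed: empty fields,
// stray characters, or a value above 1023.
std::vector<int> parseSensorLine(const std::string &line) {
  std::vector<int> values;
  int current = 0;          // value being accumulated
  bool haveDigits = false;  // true once the current field has a digit

  for (char c : line) {
    if (c == '\r' || c == '\n') {
      continue;  // skip the line ending sent by Serial.println()
    }
    if (std::isdigit(static_cast<unsigned char>(c))) {
      current = current * 10 + (c - '0');
      haveDigits = true;
      if (current > 1023) {
        return {};  // outside the 10-bit ADC range
      }
    } else if (c == '.' && haveDigits) {
      values.push_back(current);  // a period closes the current field
      current = 0;
      haveDigits = false;
    } else {
      return {};  // empty field or unexpected character
    }
  }

  if (!haveDigits) {
    return {};  // empty line, or line ending in a period
  }
  values.push_back(current);
  return values;
}
```

Rejecting a malformed line outright, rather than keeping whatever parsed, means a partial line received at startup is skipped instead of being treated as a valid set of readings.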