Arturo, Julio, Tamar, and I put together a storyboard for our Communications Lab narrative video. Click above for a semi-decipherable full-res version.

Danger Mouse

Eric Mika

If it’s not a prank, this mouse has to be the most chilling example thus far in support of dictatorship over direct democracy in matters of design.

The open source community has delivered millions of lines of code to the world, and I love it dearly for this. But the movement seems completely devoid of aesthetic sensibility or restraint. Even the Ubuntu project, which tries so hard to get usability right (and does, to some extent) still serves up a watery approximation of Windows and an excess of configuration options.

Maybe things will improve as more non-coders learn to code. (Or maybe this will just do to code what the open-source developers did to design.)

(Via Daring Fireball.)

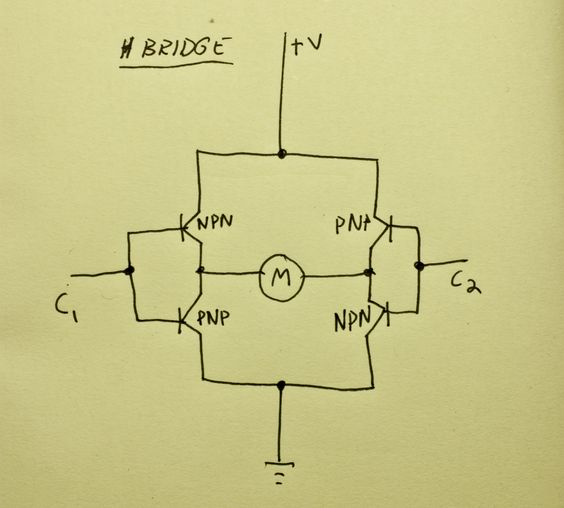

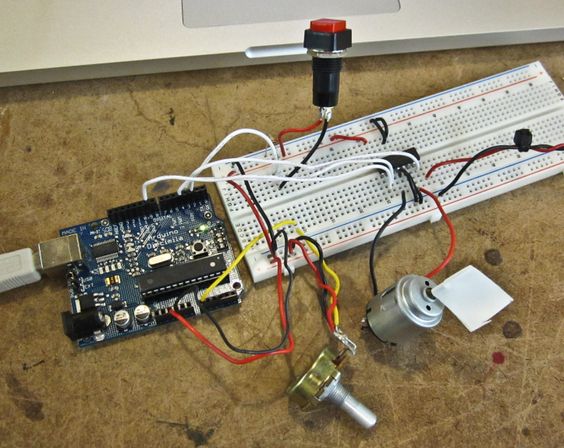

Lab: DC Motor Control

Eric Mika

This week’s lab covered DC motor control with an H-bridge, an IC that makes it possible to control a motor’s direction. Applying power to C1 sends current through the motor in one direction, while applying power to C2 sends it through the other way. Setting both C1 and C2 high has a braking effect on the motor.
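The control logic is small enough to write down as a lookup. Here is a minimal sketch in Python rather than Arduino code, just to make the truth table explicit. The function name `motor_state` and the labels "forward" and "reverse" are my own placeholders (which direction counts as forward depends on how the motor is wired), and the both-low case is an assumption, since the lab only covers the other three combinations.

```python
def motor_state(c1: bool, c2: bool) -> str:
    """Describe what the motor does for a pair of H-bridge control inputs."""
    if c1 and c2:
        return "brake"      # both inputs high: braking effect
    if c1:
        return "forward"    # only C1 high: current flows one way
    if c2:
        return "reverse"    # only C2 high: current flows the other way
    return "stop"           # assumption: both low leaves the motor undriven


# Print the full truth table.
for c1 in (False, True):
    for c2 in (False, True):
        print(f"C1={int(c1)} C2={int(c2)} -> {motor_state(c1, c2)}")
```

On the actual board, each of those inputs would just be a digital output pin set high or low.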

I added a potentiometer to the lab’s circuit to allow speed control. The pot controlled a PWM signal going to the H-bridge’s enable pin. Power to the motor came courtesy of a 9V wall wart.

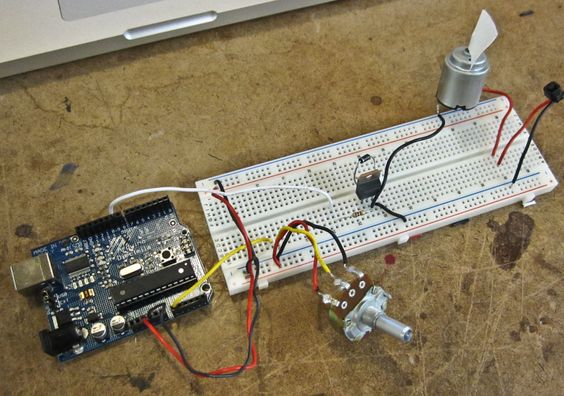

Lab: Transistors

Eric Mika

The transistor lab explains how to control a higher voltage circuit with a lower voltage one. In this case, I used the Arduino and a transistor to start and stop a DC motor running on a separate 9V power source.

The circuit looks like this. The diode across the transistor keeps current from flowing back through the circuit while the motor (…now a generator) spins down after power is cut.

And a little code will set the motor starting and stopping. I initially tried to run the motor on the Arduino’s 5V power supply, but as soon as it turned the motor on, the Arduino would brown out and reset. Hooking up an external 9V supply fixed this. (However, judging from the frantic sounds the motor produced, 9V might have been more than it wanted.)

Using a potentiometer to control a PWM signal to the transistor allowed for speed control:
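The arithmetic behind that is just a rescaling. The Arduino's analog input reads the pot as a value from 0 to 1023, while its PWM output takes a duty value from 0 to 255. Below is a rough sketch of the mapping in Python, not the sketch I actually ran; `pot_to_duty` and the constant names are hypothetical, and the clamping is an extra safety step I'm assuming rather than something the lab calls for.

```python
ADC_MAX = 1023  # largest value a 10-bit analog read can return
PWM_MAX = 255   # largest duty value an 8-bit PWM output accepts


def pot_to_duty(reading: int) -> int:
    """Scale a potentiometer reading (0-1023) to a PWM duty value (0-255)."""
    # Clamp first so a noisy or out-of-range reading can't overflow the output.
    reading = max(0, min(ADC_MAX, reading))
    # Integer arithmetic, since the microcontroller works in whole numbers.
    return reading * PWM_MAX // ADC_MAX


for reading in (0, 256, 512, 1023):
    print(reading, "->", pot_to_duty(reading))
```

On the board, the result would be written to the PWM pin driving the transistor's base (or the H-bridge's enable pin in the previous lab).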

Rich 3D Modeling: Scrounging up Data

Eric Mika

Google Earth’s take on the scene sums up the problems with the state of 3D representations of our environment. Since the Brooklyn Bridge enjoys celebrity status, it gets lovingly hand-modeled either by Google or crowd-sourced obsessives. But the rest of the scene is literally flat. Street View isn’t exactly 3D, but it shows some of the other data Google has on hand that might go into improving the quality of the ground-level experience in Google Earth. The Grand Theft Auto IV screenshot provides some calibration as to how much detail current hardware can render in real time. Although much of the scene is fictional, it highlights the details Google misses and makes clear that the bottleneck to an improved model is data rather than processing power.

So where is this data going to come from? How are we going to fill in the gaps between items that are epic enough to fuss over by hand (like the bridge) and the aerial photography that’s supposed to pass for a backdrop? Where are the cars and the people and the telephone wires that humbly contribute to our sense of place?

I’m not sure the answers to these questions are being pursued very rigorously, but there are a couple of approaches to populating the current, rather sparse 3D representations of the world.

Google’s recently released Building Maker makes it easier to add buildings, but it won’t let you draw a mailbox or a tree.

In lieu of crowd-sourcing, a site called UpNext created a (most likely) hand-built 3D approximation of Manhattan that’s more navigable (but not necessarily more detailed) at street level than Google Earth’s take on the same space.

A low-budget means of building 3D models can be found in Friedrich Kirschner’s ink scanning project. A cheap camera takes a series of photographs as an object (or person) is submerged into an opaque liquid. The photographic slices are then reconstituted to create a three-dimensional point cloud. Not necessarily scalable, but an interesting example of simple and cheap technology doing the work of much more expensive LIDAR or industrial-grade 3D scanning systems.
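To get a feel for how slices might become a point cloud, here is a toy sketch in Python. It is not Kirschner's actual pipeline, just one plausible reading of the idea: I assume each photo has already been reduced to a grid of booleans marking where the object is still visible above the liquid, and that the liquid rises by a fixed step between frames. A pixel that was visible in one frame and covered in the next gets a point at that frame's liquid level. The function name `slices_to_points` and its parameters are hypothetical.

```python
def slices_to_points(masks, step=1.0):
    """Turn a sequence of visibility masks into (x, y, z) points.

    masks[i][y][x] is True where the object is still visible above the
    liquid in frame i; the liquid level is assumed to be i * step.
    """
    points = []
    for i in range(1, len(masks)):
        prev, cur = masks[i - 1], masks[i]
        for y, row in enumerate(prev):
            for x, was_visible in enumerate(row):
                # The surface at this pixel went under between frames i-1 and i.
                if was_visible and not cur[y][x]:
                    points.append((x, y, i * step))
    return points


# Three tiny 2x2 frames: everything visible, then half covered, then all covered.
frames = [
    [[True, True], [True, True]],
    [[False, True], [True, False]],
    [[False, False], [False, False]],
]
cloud = slices_to_points(frames)
print(cloud)
```

A real version would also need to threshold the photographs and calibrate the liquid level against real-world height, neither of which is attempted here.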

Ogle is a legally unsustainable but nevertheless interesting approach to extracting existing 3D data from closed systems. It basically scrapes OpenGL data as it’s rendered and writes it out in standard 3D model formats.

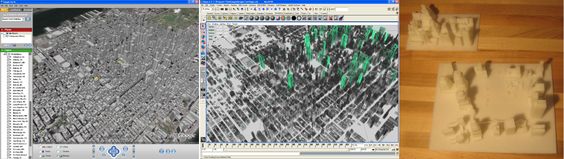

Here it is extracting data from Google Earth for 3D printing:

A few papers from the 2008 3DPVT conference also show promise in improving the models we have, or building new ones more easily.

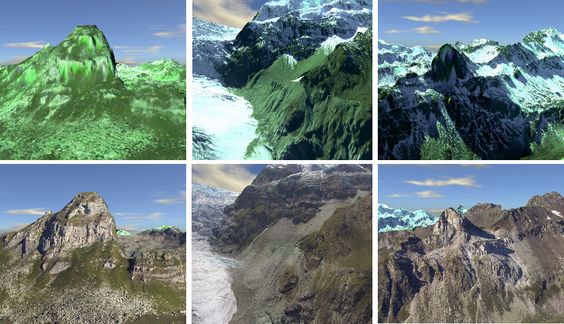

Martin Schneider and Reinhard Klein’s paper proposes improving the texturing of height map images by merging several orthographic photographs of the same terrain. Could be of use to Google, since they have access to ideal source images. The technique is not necessarily applicable to urban areas, but could improve models of rural areas.

The top row shows a region textured without the technique; the bottom row shows the same region with the improved texturing proposed in the paper.