I was particularly interested in the discussion of bullet time last week — such a surreal way to traverse a moment.

On an individual basis, executing the effect itself is now in reach of DIYers. The rig used to shoot the following was built by the Graffiti Research Lab for $8000 in 2008.

The end-point for the glut of earth-centric images and data on the web seems to be a whole-earth snapshot, representing both the present moment and any desired point in (digitally sentient) history. Could we build a navigable world-wide instant if we had enough photos? Could the process be automated? Things like Street View certainly generate an abundance of photographs, but they’re all displaced in time.

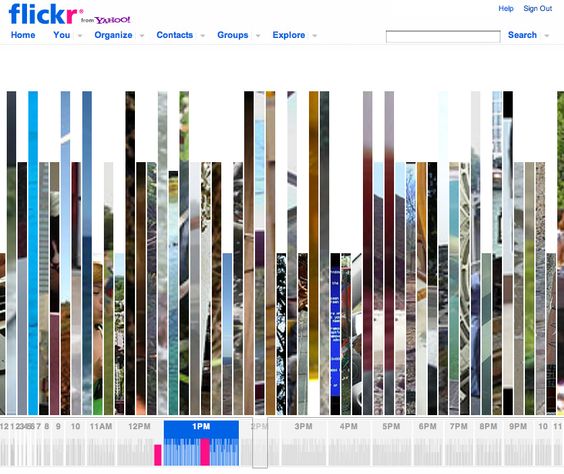

I searched pretty thoroughly and was surprised by how few efforts have been made to synchronize photographs in time (though plenty of effort has gone into synchronizing them in geographic space). Flickr has put together a clock of sorts. It’s interesting, but it only spans a day’s time and doesn’t layer multiple images on a current moment.

Still, Flickr’s a great source for huge volumes of photos all taken at the same instant. (Another 5,728 posted in the last minute.)

I wanted to see if the beginnings of this world-scale bullet-time snapshot could be constructed using publicly available tools, so I set out to write a little app via the Flickr API to grab all of the photos taken at a particular second. With this, it’s conceivable (though not yet true in practice) that we could build a global bullet-time view (of sorts) of any moment.

~I ran into some grief with the Flickr API: it doesn’t seem to allow second-level search granularity, although seconds data is definitely in their databases. So as an alternative I went for upload date, where seconds data is available through the API (at least for the most recent items).~

2022 Update: Upon revisiting the source code, it’s now possible to find matching photo capture times instead of just using the upload times. The latest version of the algorithm identifies collisions if:

- The capture times match down to the second.

- The photo’s owner is different from those already in the collision collection (to keep the results interesting).

- The photos’ capture time and upload time don’t match (based on minutes and seconds matching, to deal with the lack of time zone consistency between these fields).

- The capture times have a high confidence value according to Flickr’s data.
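The rules above could be sketched roughly like this in Python. This is an illustration, not the app's actual source: `find_collisions` and its helpers are hypothetical names, the photo fields (`datetaken`, `dateupload`, `datetakengranularity`, `datetakenunknown`, `owner`) are what I'd expect Flickr to return when date extras are requested, and reading "high confidence" as "granularity 0 and not flagged unknown" is my assumption.

```python
from datetime import datetime, timezone

TAKEN_FORMAT = "%Y-%m-%d %H:%M:%S"


def has_confident_capture_time(photo):
    """Assumed reading of 'high confidence': exact granularity, time not flagged unknown."""
    return (str(photo.get("datetakenunknown")) == "0"
            and str(photo.get("datetakengranularity")) == "0")


def capture_differs_from_upload(photo):
    """Compare minutes and seconds only, which sidesteps whole-hour time zone offsets."""
    taken = datetime.strptime(photo["datetaken"], TAKEN_FORMAT)
    uploaded = datetime.fromtimestamp(int(photo["dateupload"]), tz=timezone.utc)
    return (taken.minute, taken.second) != (uploaded.minute, uploaded.second)


def find_collisions(photos, target_second):
    """Return photos captured at target_second, keeping at most one per owner."""
    seen_owners = set()
    collisions = []
    for photo in photos:
        if photo.get("datetaken") != target_second:
            continue  # capture time must match down to the second
        if photo["owner"] in seen_owners:
            continue  # a new owner keeps the results interesting
        if not has_confident_capture_time(photo):
            continue
        if not capture_differs_from_upload(photo):
            continue  # capture time probably just mirrors the upload time
        seen_owners.add(photo["owner"])
        collisions.append(photo)
    return collisions
```

Calling `find_collisions(photos, "2008-01-10 14:05:09")` on a list of photo dicts returns the subset that collide on that second, in the order they were seen.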

Kudos to Flickr for keeping their API working with almost no breaking changes for more than a decade.

Here’s a rough cut:

This could be taken even further if the search was narrowed to those images with geotag metadata. With enough of that data, you could construct a worldwide snapshot of any given second with spatial mapping, bringing us closer still to the whole-earth snapshot.

Some efforts have been made to represent the real time flow of data, but they generally map very few items to the current moment, and don’t allow navigation to past moments. For example:

Update: Here’s the reason Flickr’s API refused to give me all the photos within the bounds of a second: “A tag, for instance, is considered a limiting agent as are user defined min_date_taken and min_date_upload parameters — If no limiting factor is passed we return only photos added in the last 12 hours (though we may extend the limit in the future).”
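To make the "limiting agent" idea concrete, here is a minimal sketch of how a request bounded to a single second might be put together. It only builds the URL and does not call Flickr. `build_search_url` is a hypothetical helper, the API key is a placeholder, and I'm assuming the `flickr.photos.search` parameters `min_taken_date`, `max_taken_date`, `extras`, and `has_geo` behave as documented. Whether the API honors a window this narrow is something to verify, so any exact-second matching should still happen on the client.

```python
from datetime import datetime, timedelta
from urllib.parse import urlencode

FLICKR_REST_ENDPOINT = "https://api.flickr.com/services/rest/"
DATE_FORMAT = "%Y-%m-%d %H:%M:%S"


def build_search_url(api_key, moment, window_seconds=1, geotagged_only=False):
    """Build a flickr.photos.search URL limited to a short capture-time window."""
    window_end = moment + timedelta(seconds=window_seconds)
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,  # placeholder; supply your own key
        # The date bounds act as the limiting agent the Flickr docs ask for.
        "min_taken_date": moment.strftime(DATE_FORMAT),
        "max_taken_date": window_end.strftime(DATE_FORMAT),
        # Ask for the date fields needed to compare capture and upload times.
        "extras": "date_taken,date_upload,geo",
        "per_page": "500",
        "format": "json",
        "nojsoncallback": "1",
    }
    if geotagged_only:
        params["has_geo"] = "1"  # narrow to photos with geotag metadata
    return FLICKR_REST_ENDPOINT + "?" + urlencode(params)
```

For example, `build_search_url("YOUR_KEY", datetime(2008, 1, 10, 14, 5, 9))` yields a URL whose date bounds span 14:05:09 to 14:05:10.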